Augmenting workers with mixed reality

In this project, the goal is to develop a wizard interface based on mixed reality that allows workers not only to perform the task, but also to gain the needed feeling of control over the machine.

Factsheet

- Schools involved: Bern Academy of the Arts; School of Engineering and Computer Science
- Institute(s): Institute for Human Centered Engineering (HUCE)
- Research unit(s): HUCE / Laboratory for Computer Perception and Virtual Reality; Knowledge Visualization
- Strategic thematic field: Thematic field "Humane Digital Transformation"
- Funding organisation: BFH
- Duration (planned): 01.10.2022 - 31.12.2022
- Head of project: Prof. Dr. Sarah Dégallier Rochat
- Project staff: Michael Flückiger, Mitra Gholami
- Keywords: Human-Machine Interface, Mixed Reality, New Skills

Situation

To cope with an increasingly volatile market, the means of production need to become more flexible. New kinds of production systems, called anthropocentric manufacturing systems (AMS), are needed, in which the worker remains in control. The goal is to benefit from the efficiency of automation while keeping the flexibility of human work. Rather than setups designed specifically for a given set of tasks, flexible robotic systems should be developed that non-expert workers can easily adapt to new tasks and workspaces. This requires tools that not only simplify the interaction with the machine but also help workers better understand how the system works.

Course of action

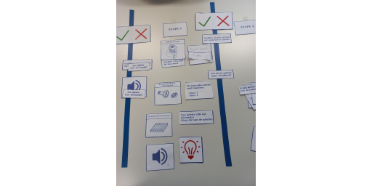

A user-centered approach was taken to develop the interface: two workshops were organized with 10 operators from the company Bien Air S.A. The goal of the first workshop was to gain insights into two questions: (a) How much does the user want or need to understand about the system to be able to use it? (b) Which modalities would make the interaction more intuitive and enjoyable for the operators? The operators were asked to design their ideal interface from cards showing different types of information, and they could also define their own cards.

A low-fidelity prototype was then designed based on the outcomes of the first workshop. In the second workshop, it was tested with a think-aloud protocol and compared against a traditional, text-based interface. The two interfaces were evaluated with the User Experience Questionnaire (UEQ) and the System Usability Scale (SUS). Short interviews with the operators were also conducted and transcribed for analysis.
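For readers unfamiliar with SUS, its standard scoring rule can be sketched as follows. Each of the ten items is answered on a 1 to 5 scale. Odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score between 0 and 100. This is a minimal Python sketch of that standard rule, not the project's own analysis code; the function name `sus_score` is hypothetical.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute a System Usability Scale score from ten 1-5 Likert responses.

    Hypothetical helper illustrating the standard SUS scoring rule.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("responses must be on a 1-5 scale")

    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded: contribution is r - 1.
        # Even items are negatively worded: contribution is 5 - r.
        total += (r - 1) if item % 2 == 1 else (5 - r)

    # The raw sum ranges from 0 to 40; scale it to the 0-100 SUS range.
    return total * 2.5
```

For example, answering 3 on every item yields 50.0, while the most favourable pattern (5 on odd items, 1 on even items) yields 100.0. To compare two interfaces, the per-operator scores for each interface would typically be averaged.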

Result

The results of the first workshop suggested that the operators prefer to work with a combination of images and text. Images are important because some of the operators are not native French speakers. Text is particularly important at the beginning, when the user is not yet familiar with the interface, but it should be possible to hide it once the user is confident with the system.

Based on these findings, a low-fidelity mixed-reality interface was developed. A schematic representation of the workspace was combined with images from the cameras, and an overlay on these images indicated what the robot was seeing (for instance, which pieces were correctly recognized and localized). A second prototype was developed based on a traditional, text-based wizard approach. In the second workshop, an A/B testing protocol was used in which the operators tested both interfaces in random order, and both interfaces were evaluated with UEQ and SUS. The results showed a slight preference for the mixed-reality interface. This was reflected in the interviews, where the operators showed greater enthusiasm for the new interface.
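The text only states that each operator tested both interfaces in random order. One common way to implement such a protocol is sketched below, under the additional assumption that the two possible orders should also be balanced across the group, so that order effects do not favour either interface. The sketch builds a pool containing each order equally often and shuffles it. The interface labels "MR" and "text", the operator IDs and the function `assign_orders` are all hypothetical.

```python
import random
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical labels for the two interfaces compared in the A/B test.
MR_FIRST: Tuple[str, str] = ("MR", "text")
TEXT_FIRST: Tuple[str, str] = ("text", "MR")


def assign_orders(
    operators: Sequence[str], seed: Optional[int] = None
) -> Dict[str, Tuple[str, str]]:
    """Give each operator a random interface order, balanced across the group.

    With an odd number of operators, MR_FIRST is used one extra time.
    """
    rng = random.Random(seed)
    # Alternate the two orders so each appears (almost) equally often...
    pool = [MR_FIRST if i % 2 == 0 else TEXT_FIRST for i in range(len(operators))]
    # ...then shuffle so that which operator gets which order is random.
    rng.shuffle(pool)
    return dict(zip(operators, pool))


if __name__ == "__main__":
    operators = [f"operator_{i:02d}" for i in range(1, 11)]  # 10 hypothetical IDs
    for op, order in assign_orders(operators, seed=42).items():
        print(op, "->", " then ".join(order))
```

Compared with flipping a coin independently for each operator, shuffling a balanced pool guarantees that, with 10 operators, exactly five start with each interface.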

Looking ahead

A high-fidelity prototype will be developed in the coming months and tested again with the same operators, this time with the real robot. It will be improved based on user feedback and integrated into production as part of the longitudinal study conducted within the SNSF project "Cobotics, digital skills and the re-humanization of the workspace" and the Innosuisse project "Agile robotic system for high-mix low-volume production".